Reduce API loading time: Never forget to Cache!


We know that one way of increasing web traffic is by decreasing page load time. So that was what the dev team set out to do.

The problem

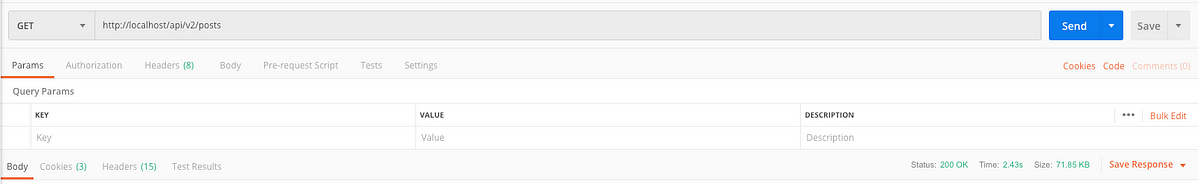

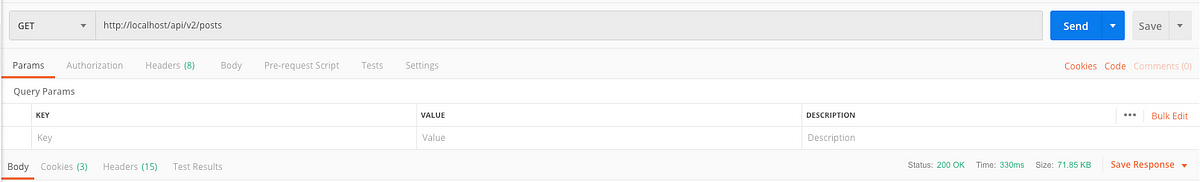

Before we could proceed, we had to investigate the extent of the problem. We did this by measuring how long requests to our API endpoints took to load. Here’s the result from one of the endpoints:

From there, we figured that we needed to improve the website’s performance by reducing the API call loading time.

Side note: all the loading times compared here were measured on localhost.
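For readers who want to take a similar measurement, here is a minimal sketch of timing a request several times. It is not the tooling we used; `measure_load_time` and `fake_endpoint` are hypothetical names made up for this example, and the fake endpoint simply sleeps to imitate a slow response.

```python
import statistics
import time
from typing import Callable


def measure_load_time(call: Callable[[], object], runs: int = 5) -> dict:
    """Time `call` several times and summarise the samples in milliseconds."""
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # in practice, an HTTP request against the endpoint under test
        samples_ms.append((time.perf_counter() - start) * 1000)
    return {
        "runs": runs,
        "min_ms": min(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "max_ms": max(samples_ms),
    }


def fake_endpoint() -> str:
    """Hypothetical stand-in for a slow API endpoint (assumption for the demo)."""
    time.sleep(0.02)
    return "{}"


if __name__ == "__main__":
    print(measure_load_time(fake_endpoint))
```

Taking several samples and looking at the median helps smooth out one-off spikes, which matters when measuring on a local machine.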

When it comes to software performance, there are so many factors that come into play. I’m not going to go through the topic of software performance (perhaps another time? *pokes lead developer Dylan). But I’ll cover the factors that we considered.

Kontinentalist is built on top of an interpreted language: with every request, the script gets compiled at runtime, turning the human-readable code into code that the machine can understand. This slows down loading, because part of each request’s execution time is spent on compiling. So that’s already a problem.

We are also aware that the database is at the heart of the app. With each request, the app runs queries against the database.

Finding the solution

From the two problems above, we formed these hypotheses: what if the machine doesn’t need to recompile the code on each request? What if we cut the compiling step out of the process? What if we reduce how often we query the database? Wouldn’t that help to boost the performance? But how?

The answer: Caching

Quoting from AWS:

Caching helps applications perform dramatically faster and costs significantly less at scale.

I’m not going to go through all the caching topics that exist out there, as the subject is extensive enough to be a course by itself. Check out these online resources if you’d like to know more. Anyway, we applied caching in two places: at the code compilation step, and at the database layer. Saddle up because it’s about to get technical!

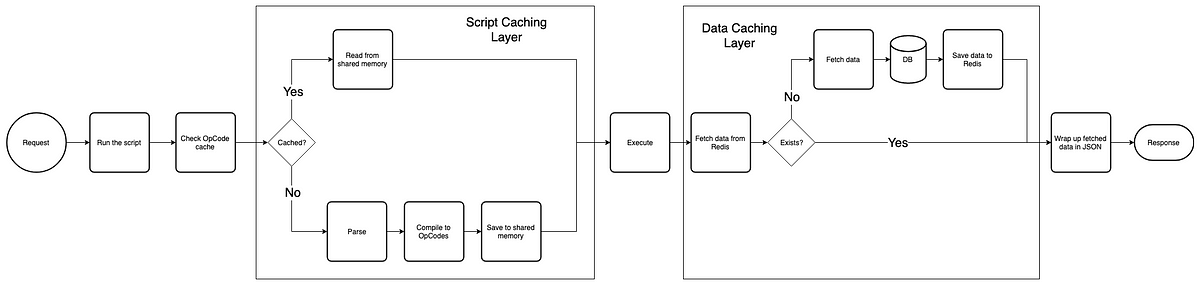

OPcache: We applied OPcache to store precompiled script bytecode in memory. This bytecode cache engine compiles a script file once, the first time it is executed, and stores the resulting bytecode in memory. On later requests, it doesn’t recompile the script. Instead, it checks whether the precompiled bytecode already exists in memory. If yes, it executes that bytecode right away. Otherwise, it compiles the script and caches the bytecode before executing it.
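To make the compile-once idea concrete, here is a small analogy in Python. It is not how the bytecode cache engine itself is implemented and it is not our production code; the `BytecodeCache` class, its `compile_count` counter, and the choice to recompile when a file's modification time changes are all assumptions made for this sketch.

```python
import os
import tempfile


class BytecodeCache:
    """Keeps compiled code objects in memory, keyed by script path."""

    def __init__(self) -> None:
        self._cache = {}  # path -> (mtime at compile time, code object)
        self.compile_count = 0  # only here to make cache hits visible

    def run_script(self, path: str) -> dict:
        """Execute a script, compiling it only if no fresh cached copy exists."""
        mtime = os.path.getmtime(path)
        cached = self._cache.get(path)
        if cached is None or cached[0] != mtime:
            # Cache miss: compile the source once and keep the result in memory.
            with open(path, encoding="utf-8") as source:
                code = compile(source.read(), path, "exec")
            self._cache[path] = (mtime, code)
            self.compile_count += 1
        else:
            # Cache hit: reuse the code object compiled on an earlier request.
            code = cached[1]
        namespace = {}
        exec(code, namespace)  # the script still runs on every request
        return namespace


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        script = os.path.join(tmp, "hello.py")
        with open(script, "w", encoding="utf-8") as handle:
            handle.write("greeting = 'hello'\n")
        cache = BytecodeCache()
        cache.run_script(script)
        cache.run_script(script)
        print(cache.compile_count)  # prints 1: the second run reused the cache
```

Only the compile step is skipped on a hit. The script is still executed for every request, which is why this kind of cache speeds things up without changing what the script does.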

Redis: We added a ‘data caching layer’ using Redis to our app layers. Since Redis is an in-memory data structure store, it reads data a lot quicker than a disk-based database (check out DZone’s article on the benchmark of MySQL vs MySQL+Redis). The concept is about the same as OPcache. When a request involves a database query, the app checks whether the data exists on the Redis server. If yes, it returns the data straight away. If not, it fetches the data from the database and saves it to the Redis server, so that subsequent requests get the data from Redis until the cached entry expires.
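The check-then-fetch flow described above can be sketched roughly as follows. This is an illustration, not our actual code: `get_with_cache`, the cache key, and the 60-second expiry are assumptions, and `InMemoryCache` is a hypothetical stand-in so the example runs without a Redis server. It only mimics `get` and `setex`, which a redis-py client also provides with the same argument order.

```python
import json
import time
from typing import Callable, Optional


class InMemoryCache:
    """Stand-in for a Redis client so the sketch runs without a server."""

    def __init__(self) -> None:
        self._store = {}  # key -> (expiry timestamp, serialized value)

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # expired entries behave like misses
            return None
        return value

    def setex(self, key: str, ttl_seconds: float, value: str) -> None:
        self._store[key] = (time.monotonic() + ttl_seconds, value)


def get_with_cache(cache, key: str, load_from_db: Callable[[], object],
                   ttl_seconds: int = 60):
    """Return the cached value for `key`, querying the database only on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)  # hit: skip the database entirely
    value = load_from_db()  # miss: run the real query
    cache.setex(key, ttl_seconds, json.dumps(value))
    return value


if __name__ == "__main__":
    db_calls = []

    def load_stories():
        """Hypothetical database query; records each call for the demo."""
        db_calls.append("SELECT ...")
        return [{"id": 1, "title": "Example story"}]

    cache = InMemoryCache()
    get_with_cache(cache, "stories:latest", load_stories)
    get_with_cache(cache, "stories:latest", load_stories)
    print(len(db_calls))  # prints 1: the second call was served from the cache
```

The expiry is the trade-off to tune: a longer one means fewer database queries but staler data, so it should match how often the underlying data changes.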

Conclusions

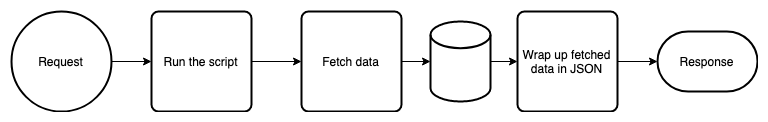

This is the flow of how the app handled a request without caching:

And this is how the app handles a request now:

It became more complex after we applied caching, but let’s see the result:

The loading time became roughly seven times faster, dropping from 2.43 s to 330 ms (2.43 s / 0.33 s ≈ 7.4).

Isn’t it crazy? There are still several things we can improve on, but the beauty of software engineering is that it allows us to improve the product iteratively.

It’s clear now that caching is crucial for performance. Therefore, whenever you are ready to optimize your app, never forget to cache!